PowerProtect Data Manager (PPDM) 19.18 dropped earlier today. There is a fair bit to unpack, but three highlights stand out for me. I hope to go into more detail on these (especially the exciting new anomaly detection feature) in future posts. In the meantime, here is a quick overview:

1. NetApp as an explicit array type/NAS Asset Source

NetApp is added as an explicit array type that can be selected when adding a NAS asset source. This selection allows NetApp appliances to more easily integrate into NAS protection workflows.

This is pretty much hot off the press, but here is the link to the official NAS configuration guide where you can find more detail. You will need to register to view.

PowerProtect Data Manager 19.18 Network-Attached Storage User Guide

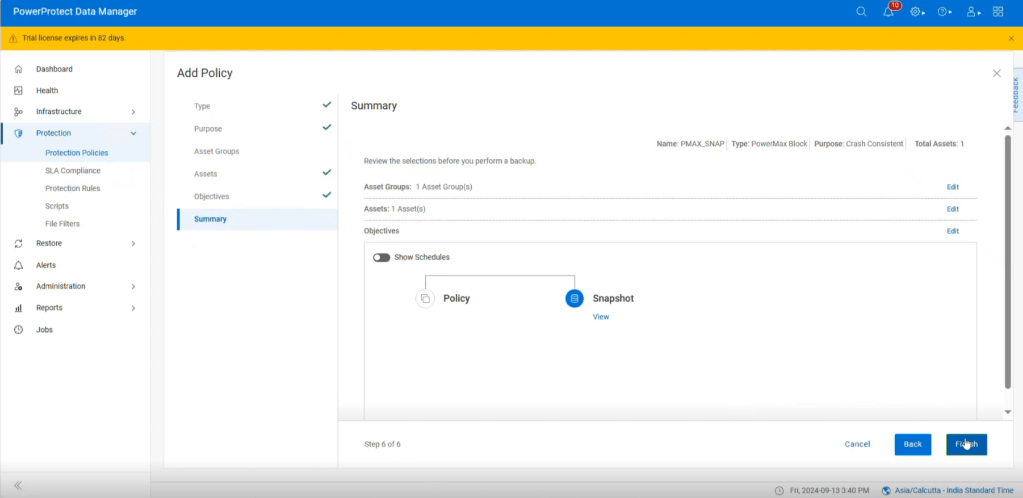

2. PowerMax Local Snapshot Management

Integrated PowerStore snapshot management, which provides simple policy management for the creation, retention, and deletion of array-based snapshots, has been available since PPDM release 19.14. Release 19.18 brings feature parity to the PowerMax array and builds on the much tighter PowerMax integration with Storage Direct introduced in release 19.17. I blogged about this feature back in late July. Click here to view the blog on Storage Direct Protection.

Also check out the official documentation here:

PowerProtect Data Manager PowerMax Administration Guide 19.18

Simple workflow integration:

Protection Policy executes on PPDM:

Snapshot created on remote PowerMax array:

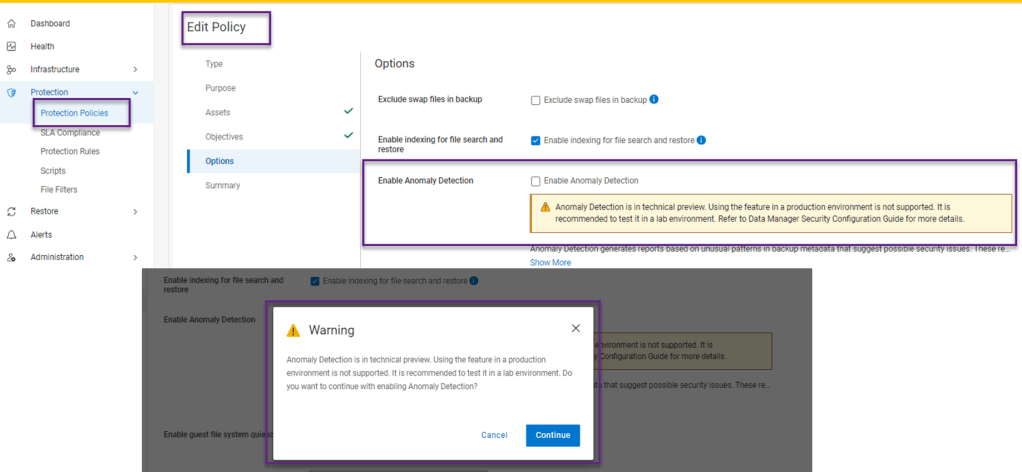

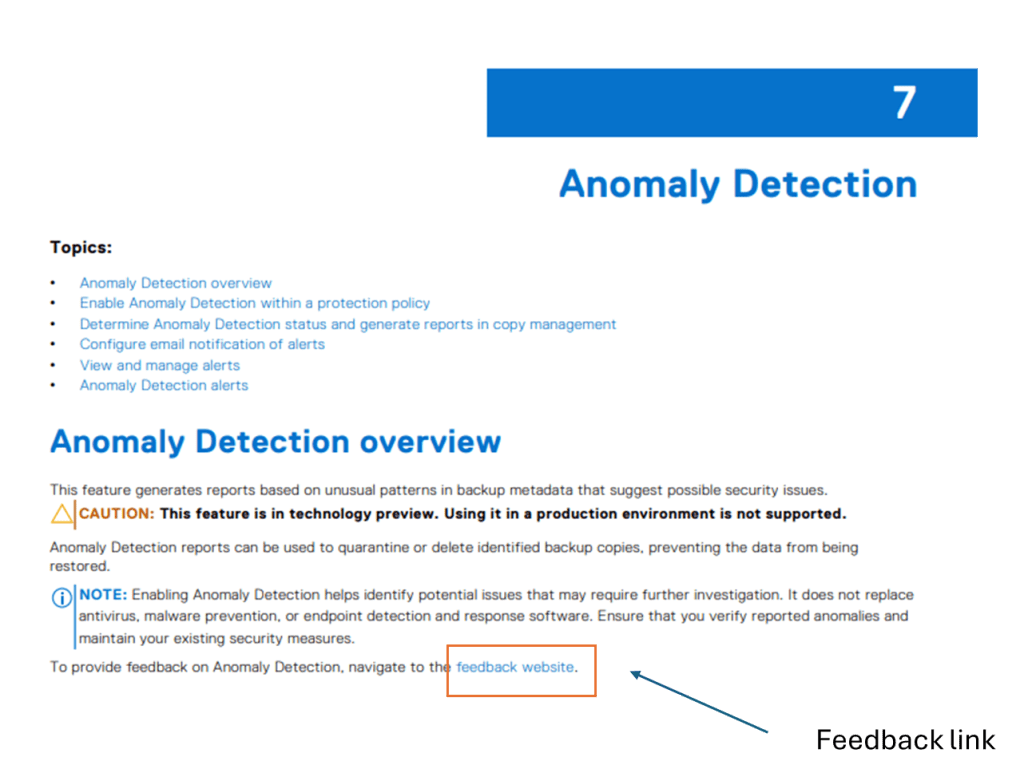

3. Anomaly Detection

Last but not least, this is the new feature that was introduced as a Beta in 19.17 and is now in Tech Preview in 19.18. (Tech Preview basically means that it is officially released but not yet ready for full production use.) I have included a link below where customers can send feature feedback.

Anomaly Detection generates reports based on unusual patterns in backup metadata that suggest possible security issues. Whilst enabling Anomaly Detection helps identify potential issues that may require further investigation, it does not replace antivirus, malware prevention, or endpoint detection and response software. Ensure that you verify reported anomalies and maintain your existing security measures. (Really just stating the obvious here… defense in depth and all that!)
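To make the idea of "unusual patterns in backup metadata" a little more concrete, here is a minimal Python sketch. To be clear, this is not how PPDM implements Anomaly Detection, and it uses no PPDM API. The `BackupCopy` fields, the change-rate metric, and the three-standard-deviation threshold are all my own assumptions, chosen purely for illustration: it flags a copy whose proportion of changed files is far above what earlier copies of the same asset showed.

```python
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class BackupCopy:
    """Hypothetical per-copy metadata; not a PPDM data structure."""
    job_id: str
    files_total: int
    files_changed: int


def change_rate(copy: BackupCopy) -> float:
    """Fraction of files that changed since the previous copy."""
    return copy.files_changed / copy.files_total if copy.files_total else 0.0


def is_anomalous(history: list[BackupCopy], latest: BackupCopy,
                 threshold: float = 3.0) -> bool:
    """Flag `latest` if its change rate is more than `threshold`
    standard deviations above the mean change rate of `history`."""
    rates = [change_rate(c) for c in history]
    if len(rates) < 2:
        return False  # not enough history to judge
    mu, sigma = mean(rates), stdev(rates)
    if sigma == 0:
        # Perfectly flat history: any increase counts as unusual.
        return change_rate(latest) > mu
    return (change_rate(latest) - mu) / sigma > threshold


# Illustrative (made-up) history: roughly 1% of files change per backup.
history = [
    BackupCopy(f"job-{i}", 10_000, changed)
    for i, changed in enumerate([120, 95, 140, 110, 130])
]
normal = BackupCopy("job-5", 10_000, 125)     # in line with history
suspect = BackupCopy("job-6", 10_000, 8_500)  # 85% of files changed

for copy in (normal, suspect):
    status = "ANOMALY" if is_anomalous(history, copy) else "ok"
    print(f"{copy.job_id}: {status}")
```

A sudden jump like the second copy is the sort of signal that might be worth investigating (mass encryption tends to touch a lot of files at once), but as noted above, a flag like this is a prompt for verification and not a verdict.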

It's worth noting that this feature adds an extra layer of security to data without any additional licensing or cost. Yep, it’s included in the existing license.

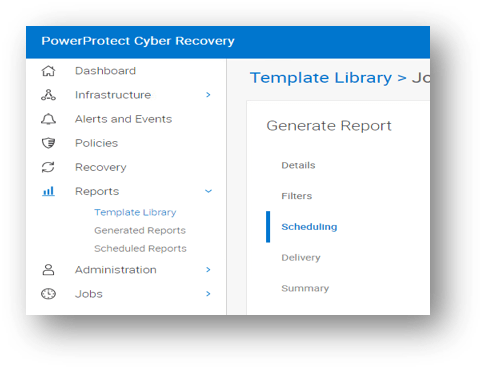

I’ve grabbed some screenshots from the latest release to give a ‘look and feel’ of this new functionality. As mentioned, I will follow up with a more technically focused blog and demo.

Enabling Anomaly Detection within the Protection Policy Tab

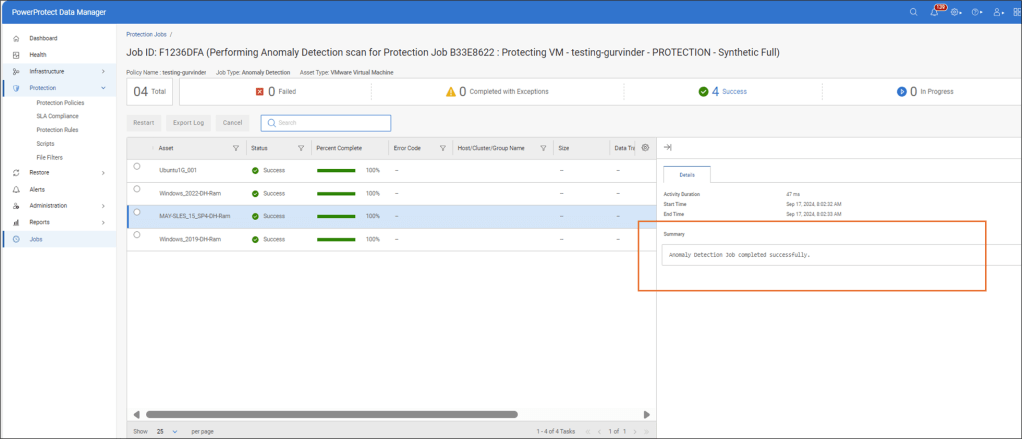

Completed Job with no Anomaly Detected

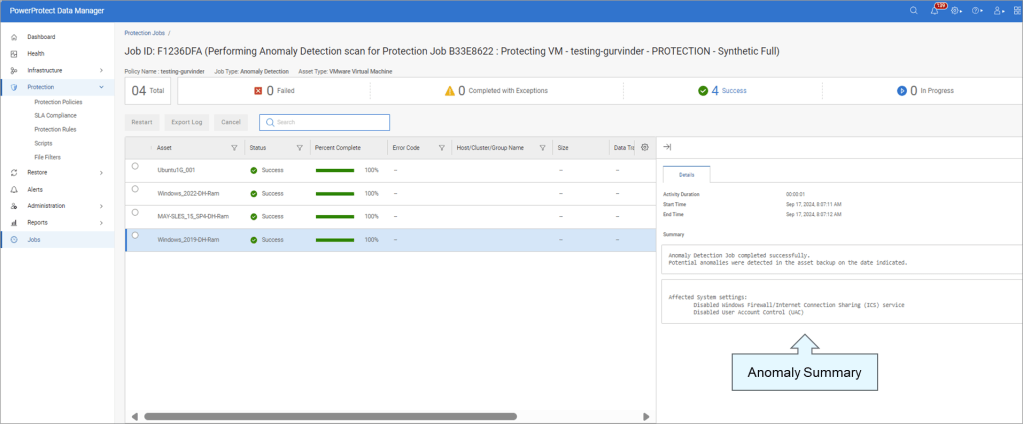

Completed Job with Anomaly Detected

Jobs View with Anomaly Detected

Critical Alerts View

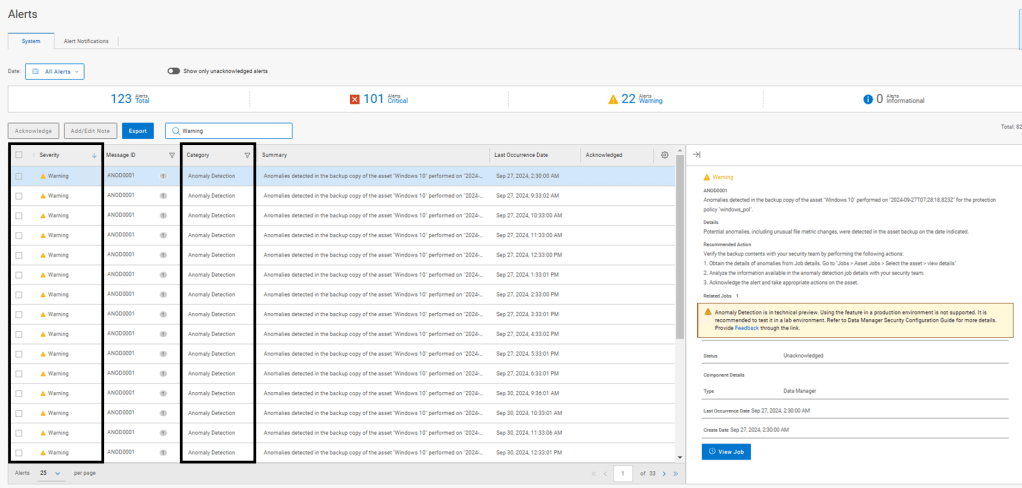

Anomaly Detection – Warning Alerts

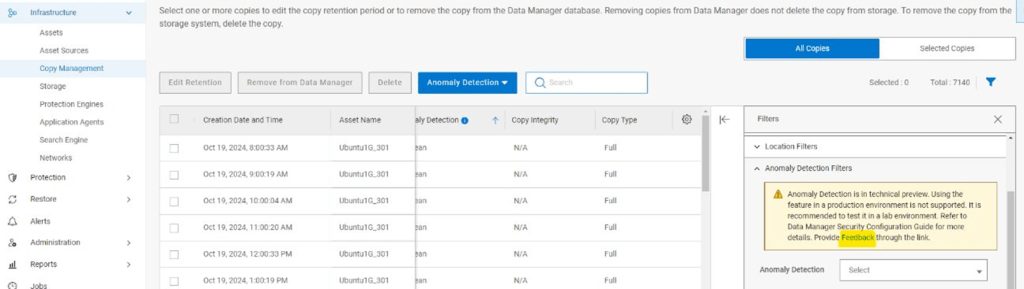

Copy Management View

Reporting View

Reports are available for download in case of suspicious copies.

Quarantine or Mark Copy Safe

Link to Provide Feature Feedback

As mentioned above, this feature is in Tech Preview. Please provide feedback via the feedback site linked below.

Detail on providing feedback is also included in the Security Configuration Guide.

PowerProtect Data Manager 19.18 Security Configuration Guide

Lots of detail is included in Chapter 7, Anomaly Detection.

I admit the link is buried in the documentation. It can be found at the link below. Note that I am referring to this documentation set specifically, as the location is potentially subject to change:

Link to Anomaly Detection Feedback Site

Other Links:

Main link to Dell Support Website for software downloads, release notes etc.

Dell Technologies Infohub for PowerProtect-related info.

Stay tuned for a deeper dive into the exciting new Anomaly Detection feature in an upcoming post.

DISCLAIMER

The views expressed on this site are strictly my own and do not necessarily reflect the opinions or views of Dell Technologies. Please always check official documentation to verify technical information.

#IWORK4DELL